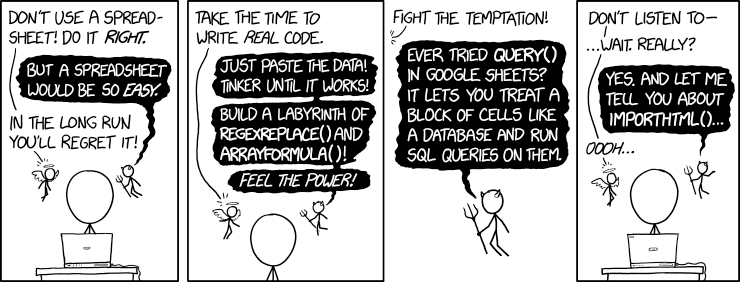

Don't ask how many of them use VBA.

"My brother once asked me if there was a function to produce a calendar grid from a list of dates in Google Sheets. I replied with a single-cell formula that took in a list of dates and outputted a calendar. It used SEQUENCE(), REGEXMATCH(), and a double-nested ARRAYFORMULA(), and it locked up the browser for 15 seconds every time it ran. I think he learned a lot about asking me things."

Back in the early 2000s, XML was all the rage. An unusual evolution from HTML, which itself was an evolution (devolution?) from SGML, XML was supposed to be a backlash against complexity. SGML originally grew from the publishing industry (for example, the original DocBook was an SGML language) and had a bunch of flexible parser features intended so not-too-technical writers could use it without really understanding how tags worked. It also had a bunch of shortcuts: for example, there's no reason to close the last <chapter> when opening a new <chapter>, because obviously you can't have a chapter inside a chapter, and so on. SGML was a bit of an organically-evolved mess, but it was a mess intended for humans. You can see a lot of that legacy in HTML, which was arguably just a variant of SGML for online publishing, minus a few features.

All that supposedly-human-friendly implicit behaviour became a real problem, especially when it came to making interoperable implementations (like web browsers). Now, don't get me wrong, the whole language parsing complaint was pretty overblown. Does browser compatibility really come down to exactly what I mean when I write some overlapping tags like <b>hello <u>cruel</b> world</u>? I mean, yes. But more important are semantics like which methods of JavaScript DOM objects take which sorts of parameters, or exist at all, and what CSS even means.

But we didn't know that then. Let's say all our compatibility problems were caused by how hard it is to parse HTML.

So some brave souls set out to solve the problem Once and For All. That was XML: a simplification of HTML/SGML with parsing inconsistencies removed, so that given any XML document, if nothing else, you always knew exactly what the parse tree should be. That made it a bit less human friendly (now you always had to close your tags), but most humans can figure out how to close tags, eventually, right?
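To make the difference concrete, here is a minimal sketch using only Python's standard library. It feeds the overlapping-tag snippet from above to a lenient HTML tokenizer and to a strict XML parser. The helper names (`EventLogger`, `html_events`, `xml_is_well_formed`) are made up for this illustration, and `html.parser` is only a tokenizer, not a browser-grade tree builder, so it shows that the input is tolerated rather than what tree any browser would build from it.

```python
from html.parser import HTMLParser
import xml.etree.ElementTree as ET

SNIPPET = "<b>hello <u>cruel</b> world</u>"  # the overlapping tags from the text


class EventLogger(HTMLParser):
    """Hypothetical helper: records every tag and text event it is handed."""

    def __init__(self):
        super().__init__()
        self.events = []

    def handle_starttag(self, tag, attrs):
        self.events.append(("start", tag))

    def handle_endtag(self, tag):
        self.events.append(("end", tag))

    def handle_data(self, data):
        self.events.append(("data", data))


def html_events(text):
    """Tokenize text leniently; overlapping tags are reported, not rejected."""
    parser = EventLogger()
    parser.feed(text)
    parser.close()
    return parser.events


def xml_is_well_formed(text):
    """Return True only if a strict XML parser accepts the text."""
    try:
        ET.fromstring(text)
    except ET.ParseError:
        return False
    return True


if __name__ == "__main__":
    print(html_events(SNIPPET))
    print(xml_is_well_formed(SNIPPET))
    print(xml_is_well_formed("<b>hello <u>cruel</u></b>"))
```

The HTML side shrugs and hands you the events in the order it saw them, leaving the "what did you mean?" question to whoever builds the tree. The XML side refuses the overlapping version outright and accepts the properly nested one, which is the whole point: there is nothing left to interpret.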

Because strictness was the goal, Postel's Law didn't apply, and there was a profusion of XML validators, each more strict than the last, including magical features like silently downloading DTDs from the Internet on every run, and magical bugs like arbitrary code execution on your local machine or data leakage if that remote DTD got hacked.

(Side note about DTDs: those were used in SGML too. Interestingly, because of the implicit tag closing, it was impossible to parse SGML without knowing the DTD, because only then could you know which tags to nest and which to auto-close. In XML, since all tags need to be closed anyway, you can happily parse a document without even having the DTD: a very welcome simplification. So DTDs are rather vestigial, syntactically, and could have been omitted. (You can still happily ignore them whenever you use XML.) They still mean something - preventing legitimate parse trees from being accepted if they contain certain semantic errors - but that turns out to be quite a lot less important. Oh well.)
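A toy sketch of that dependency, in Python. This is not real SGML and not a real DTD: `ALLOWED_CHILDREN` is a made-up table standing in for the nesting rules a DTD would supply, and `add_implied_closes` is a hypothetical helper that only understands bare `<tag>` and `</tag>` tokens. The point is just that the auto-closing decisions come from the table, while the fully closed output parses as XML with no table at all.

```python
import re
import xml.etree.ElementTree as ET

# Hypothetical stand-in for a DTD: which children each element may contain.
ALLOWED_CHILDREN = {
    "book": {"chapter"},
    "chapter": {"para"},
    "para": set(),
}

TOKEN = re.compile(r"<(/?)(\w+)>|([^<]+)")


def add_implied_closes(text, allowed=ALLOWED_CHILDREN):
    """Rewrite SGML-style markup with every implied close tag made explicit."""
    out = []
    stack = []  # currently open elements, innermost last
    for match in TOKEN.finditer(text):
        closing, name, data = match.groups()
        if data is not None:
            out.append(data)
        elif closing:
            # Explicit close: also closes anything still open inside it.
            if name in stack:
                while True:
                    top = stack.pop()
                    out.append(f"</{top}>")
                    if top == name:
                        break
        else:
            # Open tag: close ancestors that are not allowed to contain it.
            while stack and name not in allowed.get(stack[-1], set()):
                out.append(f"</{stack.pop()}>")
            stack.append(name)
            out.append(f"<{name}>")
    # End of input implicitly closes whatever is still open.
    while stack:
        out.append(f"</{stack.pop()}>")
    return "".join(out)


if __name__ == "__main__":
    loose = "<book><chapter><para>one<chapter><para>two"
    strict = add_implied_closes(loose)
    print(strict)
    # The explicit form needs no nesting table to parse.
    print(len(ET.fromstring(strict).findall("chapter")))
```

Change the table so that a chapter may contain a chapter, and the same input produces a different tree. That is exactly the ambiguity XML removed by making you close your own tags.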

Unfortunately, XML was invented by a standards committee with very little self control, so after simplifying it they couldn't stop themselves from complexifying it again. But you could mostly ignore the added bits, except for the resulting security holes, and people mostly did, and they were mostly happy.

There was a short-lived attempt to convince every person on the entire Internet to switch from easy-to-write HTML to easy-to-parse XHTML (HTML-over-XML), but that predictably failed, because HTML gets written a few billion times a day and HTML parsers get written once or twice a decade, so writability beats parsability every time. But that's an inconsequential historical footnote, best forgotten.

What actually matters is this:

XML is the solution to every problem

Why do we still hear about XML today? Because despite failing at its primary goal - a less hacky basis for HTML - it was massively successful at the related job of encoding other structured data. You could grab an XML parser, write a DTD, and auto-generate code for parsing pretty much anything. Using XSL, you could also auto-generate output files from your auto-parsed XML input files. If you wanted, your output could even be more XML, and the cycle could continue forever!

What all this meant is that, if you adopted XML, you never needed to write another parser or another output generator. You never needed to learn any new syntax (except, weirdly, XSL and DTD) because all syntax was XML. It was the LISP of the 2000s, only with angle brackets instead of round ones, and not Turing complete, and we didn't call it programming.

Most importantly, you never needed to argue with your vendor about whether their data file was valid, because XML's industry standard compliant validator tools would tell you. And never mind, since your vendor would obviously run the validator before sending you the file, you'd never get the invalid file in the first place. Life would be perfect.

Now we're getting to the point. XML was created to solve the interoperability problem. In enterprises, interoperability is huge: maybe the biggest problem of all. Heck, even humans at big companies have trouble cooperating, long before they have to exchange any data files. Companies will spend virtually any amount of money to fix interoperability, if they believe it'll work.

Money attracts consultants, and consultants attract methodologies, and methodologies attract megacorporations with methodology-driven products. XML was that catalyst. Money got invested, deployments got deployed, and business has never been the same since.

Right?

Okay, from your vantage point, seated comfortably here with me in the future, you might observe that it didn't all work out exactly as nice as we'd hoped. JSON came along and wiped out XML for web apps (but did you ever wonder why we fetch JSON using an XMLHttpRequest?). SOAP and XML-RPC were pretty unbearable. XML didn't turn out to be a great language for defining your build system configs, and "XML databases" were discovered to be an astonishingly abysmal idea. Nowadays you mostly see XML in aging industries that haven't quite gotten with the programme and switched to JSON and REST and whatever.

But what's interesting is, if you ask the enterprisey executive types whether they feel like they got their money's worth from the giant deployments they did while going Full XML, the feedback will be largely positive. XML didn't live up to expectations, but spending a lot of money on interoperability kinda did. Supply chains are a lot more integrated than they used to be. Financial systems actually do send financial data back and forth. RPCs really do get Remotely Called. All that stuff got built during the XML craze.

XML, the data format, didn't have all that much to do with it. We could have just as easily exchanged data with JSON (if it had existed) or CSV or protobufs or whatever. But XML, the dream, was a fad everyone could get behind. Nobody ever got fired for choosing XML. That dream moved the industry forward, fitfully, messily, but forward.

Blockchains

So here we are back in the present. Interoperability remains a problem, because it always will be. Aging financial systems are even more aged now than they were 15 or 20 years ago, and exchange data only a little better than before. We still write cheques and make "wire" transfers, so named because they were invented for the telegraph. Manufacturing supply chains are a lot better, but much of that improvement came from everybody just running the same one or two software megapackages. Legal contracts are really time consuming and essentially non-automated. Big companies are a little aggravated at having to clear their transactions through central authorities, not because they have anything against centralization and paying a few fees, but because those central authorities (whether banks, exchanges, or the court system) are really slow and inefficient.

We need a new generation of investment. And we need everyone to care about it all at once, because interoperability doesn't get fixed unless everybody fixes it.

That brings us to blockchains. Like XML, they are kinda fundamentally misguided; they don't solve a problem that is actually important. XML solved syntax, which turned out not to be the problem. Blockchains solve centralization, which will turn out not to be the problem. But they do create the incentive to slash and burn and invest a lot of money hiring consultants. They give us an excuse to forget everything we thought we knew about contracts and interoperability and payment clearing.

It's that forgetting that will allow progress. It'll be ugly, but it'll probably work.

Disclaimers

- Bitcoin is like the XHTML of blockchains.

- No, I don't think cryptocurrency investing is a good idea.

- Blockchain math is actually rather useful, exactly to the extent that it is a (digitally signed) "chain of blocks," which was revolutionary long ago, when it was first conceived. As one example, git is a chain of blocks and many of its magical properties come directly from that. Chains of blocks are great.
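For the curious, here is a minimal sketch of the "chain of blocks" idea in Python. It is hash-linked only, with no digital signatures, and it is not git's actual object format. `GENESIS`, `block_id`, `build_chain`, and `verify_chain` are names invented for this illustration.

```python
import hashlib

# Placeholder parent id for the first block (an assumption of this sketch).
GENESIS = "0" * 64


def block_id(parent_id, payload):
    """Derive a block's id from its parent's id plus its own payload."""
    data = f"{parent_id}\n{payload}".encode("utf-8")
    return hashlib.sha256(data).hexdigest()


def build_chain(payloads):
    """Link payloads together so each block commits to all earlier ones."""
    chain = []
    parent = GENESIS
    for payload in payloads:
        current = block_id(parent, payload)
        chain.append({"parent": parent, "payload": payload, "id": current})
        parent = current
    return chain


def verify_chain(chain):
    """Recompute every id; any edit to any earlier block is detected."""
    parent = GENESIS
    for block in chain:
        if block["parent"] != parent:
            return False
        if block["id"] != block_id(parent, block["payload"]):
            return False
        parent = block["id"]
    return True


if __name__ == "__main__":
    chain = build_chain(["first commit", "second commit", "third commit"])
    print(verify_chain(chain))
    chain[1]["payload"] = "rewritten history"
    print(verify_chain(chain))
```

Because each id depends on the parent's id, knowing the latest id is enough to pin down the entire history behind it. You get that property without any mining, consensus protocol, or public ledger.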

But the other parts are all rather dumb. We can do consensus in many (much cheaper) ways. Most people don't want their transactions or legal agreements published to the world. Consumers actually like transactions to be reversible, within reason; markets work better that way. Companies even like to be able to safely unwind legal agreements sometimes when it turns out those contracts weren't the best idea.

I predict that in 20 years, we're going to have a lot of "blockchain" stuff in production use, but it won't be much like how people imagine it today. It'll have vestigial bits that we wonder why they're there, and it'll all be faintly embarrassing, like when someone sends you their old XML-RPC API and tells you to call it.

"Yeah, I know," they'll say. "But it was state of the art back then."

Hovertext:

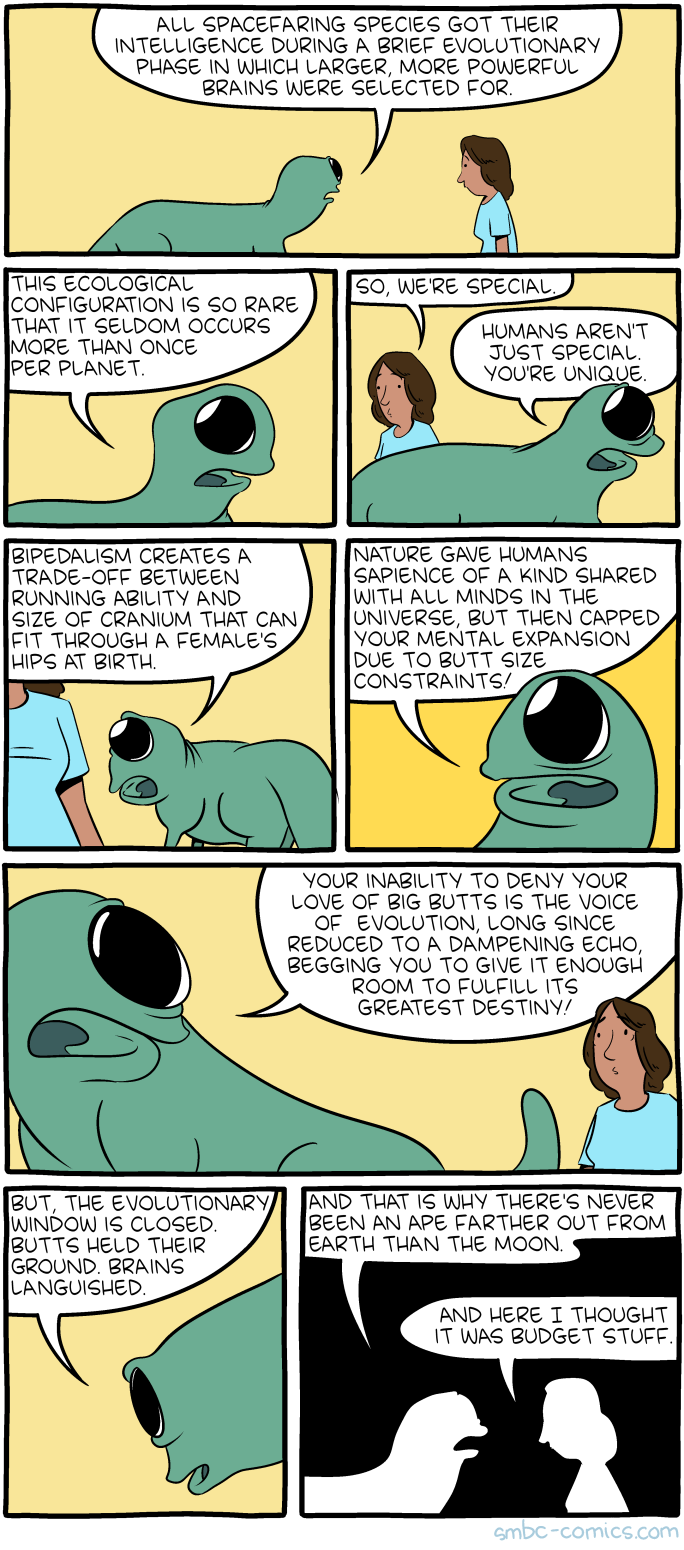

I like big butts and cannot lie, 'cause they allow a large brain to pass by.

Today's News:

new entry in Justin O’Beirne’s ongoing series

“We need for the machines to wake up, not in the sense of computers becoming self-aware, but in the sense of corporations recognizing the consequences of their behavior.”